Understanding Hallucinations in AI: Why They Happen and How to Overcome Them

Summary

The article examines AI hallucinations, where generative models produce fluent but fabricated outputs. It distinguishes hallucinations from errors and misunderstandings, identifies root causes such as poor data quality, biased decoding, and unclear prompts, and highlights risks in healthcare and misinformation. It also outlines mitigation strategies including guardrails, structured inputs, fact-checking pipelines, and human oversight.

Key insights:

Fabrication Mechanism: Hallucinations generate confident but ungrounded information that appears credible.

Root Causes: Poor training data, biased generation methods, and ambiguous prompts drive false outputs.

High-Stakes Impact: Healthcare, legal, and media use cases amplify risks of undetected fabrications.

Bias Propagation: Imbalanced datasets can cause models to invent misleading patterns.

Technical Safeguards: Guardrails, adversarial training, and automated fact-checking reduce hallucination rates.

Human Oversight: Expert validation remains essential to detect and correct AI outputs.

Introduction

Artificial intelligence is transforming everything, from how we write and research to how we diagnose disease, navigate cities, and interact with the digital world. These systems, trained on vast amounts of data, possess an almost magical ability to comprehend language, recognize images, and solve complex problems. But lurking behind the polished facade of modern AI is a strange and sometimes unsettling phenomenon: hallucinations.

AI doesn’t dream in the human sense, but it can fabricate: it can make things up, confidently and convincingly. In artificial intelligence, a hallucination is output that is untrue, unsupported by any data, or entirely fictional. These “hallucinations” may take the form of fake facts, invented quotes, incorrect citations, or completely fabricated people, places, or events. Sometimes they’re harmless. Sometimes they’re dangerous. They always raise important questions about how much we can, or should, trust intelligent machines.

Definitions

In the context of artificial intelligence, AI hallucination refers to the phenomenon where a generative model produces output that is syntactically or semantically plausible but factually incorrect, ungrounded, or entirely fabricated. The term “hallucination” is metaphorical: it draws on the analogy of a person perceiving something that is not real, and it highlights the model’s detachment from verifiable truth or objective reality.

Traditional machine learning errors are typically quantitative misclassifications, such as labeling a cat as a dog. Hallucinations, by contrast, are qualitative: the model generates new information that appears confident and coherent yet lacks fidelity to the input, the context, or the ground truth.

In simpler terms, a hallucination is not just a mistake but a fabrication that “looks right”: a falsehood masked by fluency.

Why Hallucinations Happen

AI models, including LLMs, are first trained on a dataset. As they consume more training data, they learn to identify patterns and relationships within it, which enables them to make predictions and produce output in response to a user's input (known as a prompt). Sometimes, however, an LLM learns incorrect patterns, which can lead to incorrect results or hallucinations.
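To see how pattern learning alone can yield a fluent falsehood, consider a deliberately tiny sketch: a bigram model, far simpler than an LLM, that only learns which word tends to follow which. The two-sentence corpus and the function names are hypothetical. Every training sentence is true, yet the model can recombine the patterns it learned into sentences it never saw.

```python
from collections import defaultdict

def train_bigrams(corpus):
    """Record, for each word, the set of words observed immediately after it."""
    successors = defaultdict(set)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, cur in zip(tokens, tokens[1:]):
            successors[prev].add(cur)
    return successors

def all_generations(successors, max_len=12):
    """Enumerate every sentence reachable by following learned word-to-word transitions."""
    results = []

    def walk(path):
        if path[-1] == "</s>":
            results.append(" ".join(path[1:-1]))  # drop the start/end markers
            return
        if len(path) > max_len:  # guard against cycles
            return
        for word in sorted(successors[path[-1]]):
            walk(path + [word])

    walk(["<s>"])
    return results

# Hypothetical training data: both sentences are true.
corpus = ["paris is the capital of france", "rome is the capital of italy"]
generated = all_generations(train_bigrams(corpus))
fabricated = [s for s in generated if s not in corpus]
print(fabricated)  # ['paris is the capital of italy', 'rome is the capital of france']
```

Both fabricated sentences are grammatical and follow every pattern in the data; nothing in the model tracks whether a combination is true. LLMs are vastly more capable, but the same gap between "statistically plausible" and "factually grounded" is one way hallucinations can arise.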

There are many possible reasons for hallucinations in LLMs, including the following:

Poor data quality: Hallucinations might occur when the data used to train the LLM contains bad, incorrect, or incomplete information. LLMs rely on a large body of training data to produce output that is as relevant and accurate as possible for the user who provided the prompt. However, this training data can contain noise, errors, biases, or inconsistencies, and when it does, the LLM may produce incorrect and, in some cases, completely nonsensical outputs.
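A minimal sketch of cleanup aimed at these problems, assuming the training data is a list of (question, answer) pairs: it drops incomplete records, merges duplicates that differ only in case or spacing, and sets aside questions that appear with contradictory answers. The function name, the normalization rules, and the sample records are illustrative assumptions; production data pipelines are far more involved.

```python
from collections import defaultdict

def clean_qa_pairs(pairs):
    """Drop empty and duplicate (question, answer) pairs; set aside contradictory ones."""
    answers_by_question = defaultdict(set)
    for question, answer in pairs:
        q = " ".join(question.lower().split())  # normalize case and whitespace
        a = " ".join(answer.split())
        if q and a:  # skip incomplete records
            answers_by_question[q].add(a)

    clean, conflicts = {}, {}
    for q, answers in answers_by_question.items():
        if len(answers) == 1:
            clean[q] = next(iter(answers))
        else:
            conflicts[q] = sorted(answers)  # inconsistent labels go to human review
    return clean, conflicts

# Hypothetical raw records
pairs = [
    ("Who wrote Hamlet?", "William Shakespeare"),
    ("who wrote  hamlet?", "William Shakespeare"),   # duplicate after normalization
    ("Who painted the Mona Lisa?", "Leonardo da Vinci"),
    ("Who painted the Mona Lisa?", "Michelangelo"),  # contradicts the record above
    ("", "42"),                                      # incomplete record
]
clean, conflicts = clean_qa_pairs(pairs)
print(clean)      # {'who wrote hamlet?': 'William Shakespeare'}
print(conflicts)  # {'who painted the mona lisa?': ['Leonardo da Vinci', 'Michelangelo']}
```

Setting conflicts aside, rather than keeping whichever answer appears first, avoids teaching the model that either answer is acceptable.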

Generation method: Hallucinations can also arise from the training and generation methods used, even when the dataset itself is consistent, reliable, and of high quality. For example, bias can build up from the model's own previous generations, since each token it produces conditions the next one, or the transformer might decode incorrectly; either can cause the system to hallucinate its response. Models might also be biased toward generic or specific words, which can skew the information they generate or lead them to fabricate a response.
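Decoding settings are one concrete example of how the generation method, rather than the data, shapes what a model says. The sketch below uses temperature scaling, a common sampling control chosen here purely as an illustration: the model's raw scores (logits) are divided by a temperature before the softmax. The three candidate answers and their logits are invented numbers.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical next-token scores after "The capital of Australia is"
candidates = ["Canberra", "Sydney", "Melbourne"]
logits = [4.0, 2.5, 1.5]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    wrong_mass = 1 - probs[candidates.index("Canberra")]  # chance of sampling a wrong city
    print(f"temperature={t}: P(wrong answer)={wrong_mass:.2f}")
# temperature=0.5: P(wrong answer)=0.05
# temperature=1.0: P(wrong answer)=0.23
# temperature=2.0: P(wrong answer)=0.43
```

With the same model and the same scores, raising the temperature gives the incorrect cities a larger share of probability, so a sampled answer is more likely to be fluent and wrong. Lower temperatures reduce this risk but do not remove it, since a model can also assign its highest score to a wrong answer.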

Input context: If the input prompt provided by the user is unclear, inconsistent, or contradictory, hallucinations can arise. While users cannot control the quality of the training data or the training methods, they can control the inputs they provide to the AI system. By refining their prompts and supplying the right context, they can get the system to produce better results.
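One way to supply that context is to wrap each question in a prompt that includes the relevant source material and says what to do when the material does not contain the answer. The template wording and the function name below are hypothetical, and no prompt guarantees a faithful answer; it only narrows the room for fabrication.

```python
def build_grounded_prompt(question, passages):
    """Assemble a prompt that supplies context and explicitly permits the model to decline."""
    numbered = "\n".join(f"[{i}] {p.strip()}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered passages below.\n"
        "Cite the passage number for each claim. If the passages do not "
        "contain the answer, reply exactly: I don't know.\n\n"
        f"Passages:\n{numbered}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )

# Hypothetical question and context passages
prompt = build_grounded_prompt(
    "When was the Eiffel Tower completed?",
    ["The Eiffel Tower was completed in 1889.", "It is located in Paris, France."],
)
print(prompt)
```

Compared with a bare question, this prompt is unambiguous about which sources are allowed and gives the model an explicit alternative to guessing.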

Hallucination vs. Error vs. Misunderstanding

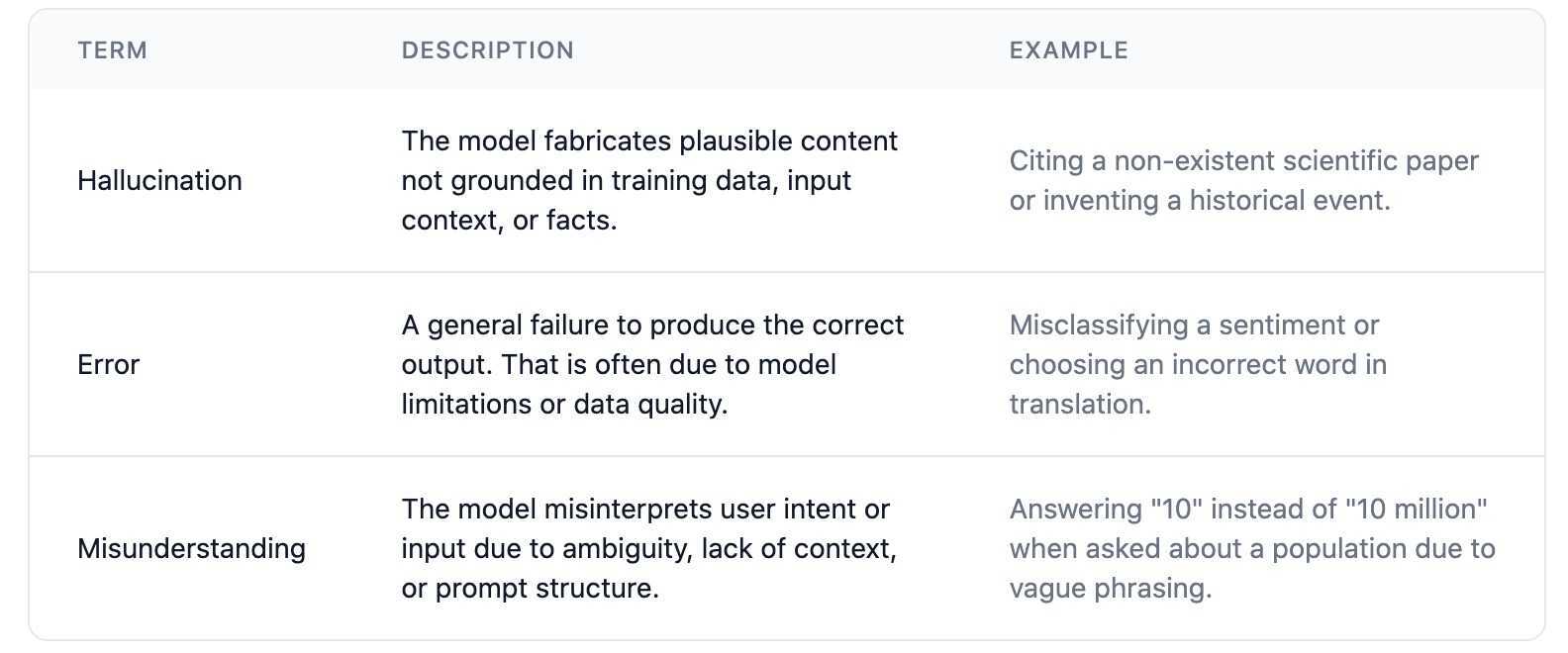

It is essential to differentiate between hallucination, factual error, and model misunderstanding, particularly in the context of large language models (LLMs) and other generative systems.

Errors and misunderstandings often arise from surface-level noise or poor input formulation. However, hallucinations reflect deeper limitations in how generative models represent, retrieve, and reason over knowledge.

Moreover, hallucination is particularly concerning because it evades detection. It does not “look” like a mistake to a casual observer. This is one reason hallucinations are dangerous in high-stakes applications like legal tech, medicine, or journalism.

Implications of AI hallucination

1. Healthcare Risks

AI hallucination can have significant consequences for real-world applications. For example, a healthcare AI model might incorrectly identify a benign skin lesion as malignant, leading to unnecessary medical interventions.

2. Spread of Misinformation

Hallucination problems can also contribute to the spread of misinformation. If hallucinating news bots respond to queries about a developing emergency with information that hasn’t been fact-checked, falsehoods can quickly spread and undermine mitigation efforts.

3. Input Bias

One significant source of hallucination in machine learning algorithms is input bias. If an AI model is trained on a dataset comprising biased or unrepresentative data, it may hallucinate patterns or features that reflect these biases.

4. Adversarial Attacks

AI models can also be vulnerable to adversarial attacks, wherein bad actors manipulate the output of an AI model by subtly tweaking the input data. In image recognition tasks, for example, an adversarial attack might involve adding a small amount of specially crafted noise to an image, causing the AI to misclassify it. This poses serious security concerns, especially in sensitive areas such as cybersecurity and autonomous vehicle technologies.
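The fast gradient sign method (FGSM) is a well-known way to craft such noise: it nudges every input feature by a small amount, epsilon, in whichever direction increases the model's loss. The sketch below applies it to a hypothetical four-feature linear classifier rather than an image model, because for a linear score w·x + b the gradient with respect to the input is simply the weight vector w. The weights, input, and epsilon are made-up values.

```python
def sign(v):
    return (v > 0) - (v < 0)

def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0

def fgsm_linear(w, x, label, epsilon):
    """FGSM for a logistic/linear model: move each feature by epsilon to increase the loss.

    For label 1 the loss grows as the score falls, so step along -sign(w);
    for label 0 step along +sign(w).
    """
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(wi) for xi, wi in zip(x, w)]

# Hypothetical model and input
w, b = [0.5, -0.3, 0.8, 0.2], -0.1
x = [0.2, 0.1, 0.1, 0.3]
x_adv = fgsm_linear(w, x, label=predict(w, b, x), epsilon=0.1)

print(predict(w, b, x), predict(w, b, x_adv))    # 1 0
print(max(abs(a - c) for a, c in zip(x, x_adv)))  # largest per-feature change (about 0.1)
```

Each feature moves by at most 0.1, yet the prediction flips, because every small change pushes the score the same way and they add up: the score drops by epsilon times the sum of |w|, here 0.18, taking it from 0.11 to -0.07. Inputs with many features, such as images, give an attacker far more room to accumulate such changes.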

5. Guardrails and Mitigation

AI researchers are constantly developing guardrails to protect AI tools against adversarial attacks. Techniques such as adversarial training, where the model is trained on a mixture of normal and adversarial examples, help address these security issues. In the meantime, vigilance in the training and fact-checking phases remains paramount.
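A minimal sketch of adversarial training, assuming a logistic-regression model and FGSM as the attack: at every epoch the training set is mixed with adversarial copies of each example, crafted against the current weights, and the model takes a gradient step on the combined batch. The one-feature dataset, epsilon, learning rate, and epoch count are hypothetical values chosen to keep the example small.

```python
import math

def sign(v):
    return (v > 0) - (v < 0)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(w, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the logistic loss."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(wi) for xi, wi in zip(x, w)]

def adversarial_train(data, epsilon=0.5, lr=0.1, epochs=200):
    """Full-batch gradient descent on clean examples plus freshly crafted FGSM examples."""
    n_features = len(data[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        # Mix normal and adversarial examples; the attacks target the current weights.
        batch = data + [(fgsm(w, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in batch:
            error = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y  # dLoss/dscore
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

# Hypothetical one-feature dataset: negative values are class 0, positive values class 1.
data = [([-2.0], 0), ([-1.5], 0), ([1.5], 1), ([2.0], 1)]
w, b = adversarial_train(data)

clean_ok = all(predict(w, b, x) == y for x, y in data)
adversarial_ok = all(predict(w, b, fgsm(w, x, y, 0.5)) == y for x, y in data)
print(clean_ok, adversarial_ok)  # True True
```

On data this simple, a normally trained model would also survive the attack; the point of the sketch is the training loop itself, where each batch mixes normal and adversarial examples. On realistic data, the same loop trades some clean accuracy for robustness, and the balance has to be measured.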

Preventing AI hallucinations

The best way to mitigate the impact of AI hallucinations is to stop them before they happen. Here are some steps you can take to keep your AI models functioning optimally:

1. Use high-quality training data

Generative AI models rely on their training data to complete tasks, so the quality and relevance of training datasets will dictate the model’s behavior and the quality of its outputs. To prevent hallucinations, ensure that AI models are trained on diverse, balanced, and well-structured data. This will help your model minimize output bias, better understand its tasks, and yield more effective outputs.
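Balance is one property that is easy to check before training. The sketch below, with a hypothetical function name and an arbitrary 20% threshold, reports each label's share of a dataset and flags labels that fall below it. The skin-lesion labels echo the healthcare example earlier in this article and use invented counts.

```python
from collections import Counter

def balance_report(labels, min_share=0.2):
    """Return each label's share of the dataset and the labels below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {label: count / total for label, count in counts.items()}
    underrepresented = sorted(label for label, share in shares.items() if share < min_share)
    return shares, underrepresented

# Hypothetical labels for a skin-lesion dataset
labels = ["benign"] * 90 + ["malignant"] * 10
shares, flagged = balance_report(labels)
print(shares)   # {'benign': 0.9, 'malignant': 0.1}
print(flagged)  # ['malignant']
```

A flagged label is a cue to collect more examples or reweight the existing ones, so the model does not learn that the rare class barely exists.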

2. Define the purpose your AI model will serve

Spelling out how you will use the AI model, as well as any limitations on the use of the model, will help reduce hallucinations. Your team or organization should establish the chosen AI system’s responsibilities and limitations; this will help the system complete tasks more effectively and minimize irrelevant, “hallucinatory” results.

3. Use data templates

Data templates provide teams with a predefined format, increasing the likelihood that an AI model will generate outputs that align with prescribed guidelines. Relying on data templates improves output consistency and reduces the likelihood that the model will produce faulty results.
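A minimal sketch of enforcing a data template, assuming the model is asked to reply in JSON: the output is accepted only if it parses and contains exactly the expected fields with the expected types. The field names in ANSWER_TEMPLATE are hypothetical. A template checks structure, not truth; a well-formed answer can still cite a fabricated source.

```python
import json

# Hypothetical template: each answer must state a claim, a source, and a confidence.
ANSWER_TEMPLATE = {"claim": str, "source_url": str, "confidence": float}

def parse_with_template(raw_output, template=ANSWER_TEMPLATE):
    """Return the parsed answer if it matches the template exactly, otherwise None."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None  # not valid JSON
    if not isinstance(data, dict) or set(data) != set(template):
        return None  # missing or unexpected fields
    for field, expected_type in template.items():
        if not isinstance(data[field], expected_type):
            return None  # wrong type, e.g. confidence given as text
    return data

good = '{"claim": "Water boils at 100 C at sea level.", "source_url": "https://example.com/water", "confidence": 0.9}'
bad = '{"claim": "Water boils at 100 C at sea level."}'
print(parse_with_template(good) is not None, parse_with_template(bad))  # True None
```

Rejected outputs can be retried or routed for review instead of being passed downstream in an unpredictable shape.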

4. Limit responses

AI models often hallucinate because they lack constraints that limit possible outcomes. To prevent this issue and improve the overall consistency and accuracy of results, define boundaries for AI models using filtering tools and/or clear probabilistic thresholds.
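Both kinds of boundary can be sketched in a few lines, assuming the model (or a separate scorer) exposes a probability for each candidate answer. The allowed-answer filter and the 0.7 threshold are illustrative choices, not recommended values; returning None stands for declining to answer.

```python
def constrained_answer(candidates, allowed=None, threshold=0.7):
    """Pick the most probable candidate, or None if it is disallowed or too uncertain.

    candidates: mapping of answer text -> probability assigned by the model.
    """
    if allowed is not None:
        # Filtering step: discard anything outside the permitted outputs.
        candidates = {a: p for a, p in candidates.items() if a in allowed}
    if not candidates:
        return None
    best, prob = max(candidates.items(), key=lambda item: item[1])
    # Probabilistic threshold: abstain rather than guess.
    return best if prob >= threshold else None

print(constrained_answer({"Canberra": 0.82, "Sydney": 0.18}))  # Canberra
print(constrained_answer({"Canberra": 0.45, "Sydney": 0.55}))  # None
print(constrained_answer({"Refund": 0.9, "Free upgrade": 0.1}, allowed={"Refund", "Escalate"}))  # Refund
```

A model's probability is not the same as the chance that it is correct, so thresholds like this are best tuned on held-out data.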

5. Test and refine the system continually

Testing your AI model rigorously before use is vital to preventing hallucinations, as is evaluating the model on an ongoing basis. These processes improve the system’s overall performance and enable users to adjust and/or retrain the model as data ages and evolves.
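A minimal evaluation sketch, assuming a fixed set of test questions with known reference answers: it runs the model on each question and reports the share of answers that do not match, a rough proxy for the hallucination rate. The stub model and questions are hypothetical, and normalized exact match is a deliberately crude criterion; open-ended answers usually need more careful grading or human review. Rerunning the same check after each update shows whether the rate is drifting.

```python
def normalize(text):
    """Lowercase, trim surrounding spaces and periods, and collapse internal whitespace."""
    return " ".join(text.lower().strip(" .").split())

def mismatch_rate(model, test_set):
    """Share of questions whose answer differs from the reference, plus the failing questions."""
    failures = [q for q, reference in test_set if normalize(model(q)) != normalize(reference)]
    return len(failures) / len(test_set), failures

# Hypothetical test set and a stub standing in for the real model
test_set = [
    ("What is the capital of Australia?", "Canberra"),
    ("Who wrote Hamlet?", "William Shakespeare"),
    ("What is the chemical symbol for gold?", "Au"),
]
stub_answers = {
    "What is the capital of Australia?": "Canberra.",
    "Who wrote Hamlet?": "Christopher Marlowe",
    "What is the chemical symbol for gold?": "au",
}
rate, failures = mismatch_rate(stub_answers.get, test_set)
print(f"{rate:.0%} wrong: {failures}")  # 33% wrong: ['Who wrote Hamlet?']
```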

6. Rely on human oversight

Having a human validate and review AI outputs is a final backstop against hallucination. With human oversight in place, a person is available to catch and correct the output when the AI hallucinates. A human reviewer can also offer subject matter expertise that enhances their ability to evaluate AI content for accuracy and relevance to the task.
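In a pipeline, this backstop is often implemented as routing: outputs that pass automated checks with high confidence go out, and everything else waits for a reviewer. The sketch below assumes each output already carries a confidence score and a fact-check result; the class, field names, and 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ModelOutput:
    text: str
    confidence: float        # hypothetical model or scorer confidence, 0 to 1
    passed_fact_check: bool  # hypothetical result of an automated check

def route_for_review(outputs, threshold=0.8):
    """Split outputs into auto-approved ones and a queue for a human expert."""
    approved, review_queue = [], []
    for output in outputs:
        if output.passed_fact_check and output.confidence >= threshold:
            approved.append(output)
        else:
            review_queue.append(output)  # an expert accepts, edits, or rejects it
    return approved, review_queue

outputs = [
    ModelOutput("Canberra is the capital of Australia.", 0.95, True),
    ModelOutput("Sydney is the capital of Australia.", 0.91, False),
    ModelOutput("The study enrolled 1,200 patients.", 0.55, True),
]
approved, review_queue = route_for_review(outputs)
print(len(approved), len(review_queue))  # 1 2
```

Note that the second output is routed to review despite its high confidence, because confidence alone says nothing about whether a statement is true.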

Detecting Hallucinations with Fact-Checking

One practical approach is to integrate automated fact-checking into AI pipelines: before an AI-generated statement reaches users, it is checked against external sources, for example through a search API, and statements the sources do not support are flagged. This reduces the risk that hallucinations pass through undetected.
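A simplified Python sketch of such a check follows. To keep it self-contained, the external search API is replaced by a hypothetical search() function over a small in-memory document list, and "support" is measured by crude word overlap between the statement and the best retrieved document. In a real pipeline, search() would call an actual search or retrieval service, and the overlap test would typically give way to a stronger method such as an entailment model or human review.

```python
import re

STOPWORDS = {"a", "an", "and", "by", "in", "is", "of", "on", "the", "to", "was"}

def content_words(text):
    """Lowercased alphanumeric words with common function words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def search(query, documents, k=3):
    """Hypothetical stand-in for an external search API: rank documents by word overlap."""
    query_words = content_words(query)
    ranked = sorted(documents, key=lambda d: len(query_words & content_words(d)), reverse=True)
    return ranked[:k]

def check_statement(statement, documents, threshold=0.8):
    """Return (supported, score): the best share of the statement's words found in one result."""
    words = content_words(statement)
    if not words:
        return False, 0.0
    scores = [len(words & content_words(d)) / len(words) for d in search(statement, documents)]
    best = max(scores, default=0.0)
    return best >= threshold, best

# Hypothetical reference documents and AI-generated claims
documents = [
    "Canberra is the capital city of Australia.",
    "Sydney is the largest city in Australia by population.",
    "The Eiffel Tower was completed in 1889.",
]
for claim in [
    "Canberra is the capital of Australia.",
    "Sydney is the capital of Australia.",
    "The Eiffel Tower was completed in 1925.",
]:
    supported, score = check_statement(claim, documents)
    print(f"{'OK     ' if supported else 'FLAGGED'} {score:.2f}  {claim}")
```

The first claim scores 1.00 and passes; the other two score 0.67 and 0.75 and are flagged. The Sydney claim is flagged even though each of its words appears somewhere in the documents, because no single document supports it, and the Eiffel Tower claim is flagged because its date matches nothing retrieved. Word overlap will still miss subtler fabrications, which is why human oversight remains necessary.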

AI hallucination applications

While AI hallucination is certainly an unwanted outcome in most cases, it also presents a range of intriguing use cases that can help organizations leverage its creative potential in positive ways. Examples include:

1. Art and design

AI hallucination offers a novel approach to artistic creation, providing artists, designers, and other creatives with a tool for generating visually stunning and imaginative imagery. With the hallucinatory capabilities of artificial intelligence, artists can produce surreal and dream-like images that can generate new art forms and styles.

2. Data visualization and interpretation

AI hallucination can streamline data visualization by exposing new connections and offering alternative perspectives on complex information. This can be particularly valuable in fields such as finance, where visualizing intricate market trends and financial data facilitates more nuanced decision-making and risk analysis.

3. Gaming and virtual reality (VR)

AI hallucination also enhances immersive experiences in gaming and VR. Employing AI models to hallucinate and generate virtual environments can help game developers and VR designers imagine new worlds that take the user experience to the next level. Hallucination can also add an element of surprise, unpredictability, and novelty to gaming experiences.

Conclusion

Hallucinations in AI are not simply flaws but reminders of the complexity of human-like reasoning in machines. While they pose risks to trust and reliability, they also highlight areas where innovation and oversight are most needed. By combining technical improvements with ethical safeguards, society can ensure that AI evolves into a tool that informs and empowers rather than misleads.

Build AI You Can Trust

Walturn’s product engineering and AI teams design guardrailed, production-ready systems that minimize hallucinations.
